ChatGPT Security Risks You Need to Know About

Key takeaways

- Is ChatGPT dangerous, or is it secure enough for corporate use? Learn about the main risks associated with ChatGPT, from its use by fraudsters to the possibility of leaking confidential data.

- It’s not just developers who affect the security of your company’s data but your employees, too. Discover how to create a sound enterprise security policy for ChatGPT usage, train your personnel to use ChatGPT safely, and integrate security best practices.

- Robust security measures can prevent many of the threats associated with ChatGPT. Get practical tips on mitigating ChatGPT risks and find out which experts can help you.

ChatGPT has organically entered our daily and working lives, simplifying and automating various tasks. Many companies have started to use ChatGPT in their work processes, such as customer support, sending emails, and content creation. However, its convenience hides a pressing problem: the threat to the cybersecurity of the modern workplace. Given the recent lawsuit filed against OpenAI, which alleges that the company stole “vast amounts of personal data” to train ChatGPT, understanding this AI-driven technology’s capabilities and the security risks it may pose is critical.

On top of that, this and similar cases raise the question of whether it’s even safe to bring ChatGPT into your workflows, since a number of companies, including Amazon, have banned their employees from using the tool. Should we be afraid of this technology, or should we look for efficient ways to mitigate the risks and keep benefiting from it? In this article, we uncover the dangers of ChatGPT and shed light on ways to address them so that your company can use it safely.

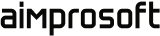

Security risks associated with ChatGPT

ChatGPT has undeniably revolutionized the way we interact with AI-powered technology. However, although this chatbot has numerous built-in security measures, it can still give rise to major security threats. In this section, we look at the potential threats ChatGPT poses, from content that can be used for malicious purposes, such as phishing and social engineering attacks, to data disclosure and the vulnerabilities that arise when interacting with APIs. Our goal is to give you a practical understanding of the security risks associated with ChatGPT because forewarned is forearmed.

Compromised data confidentiality

Although ChatGPT has safeguards in place to avoid the extraction of personal and sensitive data, the possibility of inadvertent disclosure of private information remains a concern. Information entered into ChatGPT is stored on OpenAI servers and, depending on the account settings, may be used to train machine learning models, so it should be treated as having left the company’s control.

If employees enter sensitive company data, such as customer information or internal documents, it poses a privacy threat. For example, when Samsung employees inadvertently entered proprietary source code and meeting notes into ChatGPT, they placed that intellectual property on a third party’s servers, outside the company’s control. A data breach can lead to the disclosure of personal information that can be used for malicious purposes, such as identity theft or fraud.

Bias in training data

One of the most important ethical issues with ChatGPT is the presence of bias in the training data. ChatGPT is trained on a large corpus of texts from the Internet, which contains the biases present in human language. This can cause the chatbot to produce responses that reflect or propagate gender, racial, and other biases. For example, ChatGPT has been found to generate inappropriate or offensive content when presented with biased prompts. Biased responses can perpetuate stereotypes and discrimination, negatively impacting users and potentially harming individuals or communities.

Phishing and social engineering attacks

The potential harm from using AI, particularly models like GPT, for phishing attacks is significant. AI-powered tactics make cyberattacks more sophisticated and harder to detect, posing a rising danger to individuals and organizations. Phishing, a common form of social engineering, is particularly concerning when AI is involved.

AI can generate highly convincing phishing emails and messages, often indistinguishable from genuine ones. This can lead to employees unknowingly downloading files or clicking links that contain malware or sharing sensitive personal information, such as login credentials and financial details, with cybercriminals. As a result, these attacks can lead to data breaches, financial losses, identity theft, business disruption, and reputational damage.

API attacks

Attacks on APIs in the context of artificial intelligence, such as ChatGPT, pose significant security risks. API interactions are often an integral part of AI systems. Attackers can exploit vulnerabilities in these APIs to gain unauthorized access, compromise data, or disrupt chatbot functionality.

Malicious code generation

The use of ChatGPT to produce malicious code raises significant security concerns. It enables the rapid creation of malicious code, potentially allowing attackers to outpace security measures. Moreover, ChatGPT can be used to produce obfuscated code, making it harder for security analysts to detect and comprehend malicious activities. It also lowers the barrier to entry for novice hackers, enabling them to create malicious code without deep technical expertise. Additionally, ChatGPT-generated code could be rapidly modified to evade traditional antivirus software and signature-based detection systems.

Fraudulent services

If your employees use ChatGPT or its alternatives for work, remember that the popularity of the tool is itself exploited by attackers. They create deceptive applications and platforms that impersonate ChatGPT or promise free and uninterrupted access to its features. Such fraudulent services come in various forms, including:

- Offering free access

Attackers create apps and services that claim to provide uninterrupted and free access to ChatGPT. Some go as far as creating fake websites and apps that mimic ChatGPT. Unsuspecting users can fall for these deceptive offers, putting their data and devices at risk.

- Information theft

ChatGPT scam apps are often designed to collect users’ sensitive information, such as credit card numbers, credentials, or personal data stored on their devices, including contact lists, call logs, and files. The stolen information can be used for identity theft, financial fraud, or other illegal activities.

- Malware deployment

Fraudulent applications can install additional malware, including remote access tools and ransomware, on users’ devices. Infected devices can become part of a botnet and be used for further cyberattacks, amplifying the security threat.

The security threats described above can be alarming, especially because employees can put company data at risk by not using ChatGPT responsibly. However, such problems can be addressed by giving employees proper security training on how to use it without jeopardizing the company’s data and reputation. Below, we’ll look at some critical employee education tips for keeping your company’s workflows secure.

Armed with security insights on this popular chatbot, learn how you can use ChatGPT safely to streamline your business operations.

Secure use of ChatGPT: the user’s role

Approximately 50% of HR experts, as reported by Gartner, are actively creating guidelines on their employees’ usage of ChatGPT. This reflects the growing number of people who openly acknowledge using the chatbot in a workplace setting.

A survey conducted by Fishbowl indicates that 43% of professionals in the workforce have used AI tools such as ChatGPT to accomplish job-related tasks. Interestingly, over 66% of the survey participants had not informed their employers about using these tools. The rising rates of ChatGPT usage by employees mean that it is critical for companies to introduce training and security policies for chatbot usage so that their employees can use ChatGPT competently.

In a professional context, the user’s role is crucial to ensuring the safe and responsible use of AI-based chatbots such as ChatGPT. Inputting sensitive third-party or internal corporate data into ChatGPT could lead to incorporating that data into the chatbot’s dataset. Subsequently, this information may be accessible to others who pose relevant queries, potentially causing a data breach.

Therefore, educating employees about AI-based chatbots is paramount and can save your business from unwanted consequences such as data leakage. While some companies, such as JPMorgan Chase and Amazon, have banned employees from using ChatGPT, we want to show how you can train and inform employees on the competent use of ChatGPT to keep benefiting from it.

Tips on how to ensure employees use ChatGPT safely

1. Usage of VPN

Employees should use a Virtual Private Network (VPN) when interacting with ChatGPT. A VPN helps protect their data and online activities by encrypting the connection and masking the IP address, making it harder for third parties to intercept the traffic.

2. Account protection

Employees should regularly change their passwords and follow good security practices for their ChatGPT accounts. Strong, unique passwords and two-factor authentication can help reduce the risk of unauthorized access and data breaches.

3. Protecting confidential information

Employees should refrain from sharing confidential or sensitive corporate information with AI bots. This includes customer data, financial documents, passwords, and proprietary company information.
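This tip can be partly backed by tooling. The following is a minimal sketch, not a complete data-loss-prevention solution, of masking obviously sensitive values before a prompt leaves the company. The `redact` function and the three patterns are hypothetical and deliberately simple; a real rule set would have to be tuned to the company’s own data.

```python
import re

# Hypothetical patterns for illustration only; they will miss many real cases
# and should be replaced by a vetted rule set.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "CARD": re.compile(r"\b(?:\d[ -]?){13,16}\b"),
    "SECRET": re.compile(r"(?i)\b(password|api[_-]?key|token)\s*[:=]\s*\S+"),
}


def redact(text: str) -> str:
    """Replace anything matching a known pattern with a [LABEL] placeholder."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


if __name__ == "__main__":
    prompt = "Contact jane.doe@example.com about card 4111 1111 1111 1111, password: hunter2"
    print(redact(prompt))
```

A filter like this only catches values with a recognizable shape, so it complements the rule above rather than replacing it: source code, meeting notes, and contract text still have to be kept out of prompts by the employee.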

4. Usage of secure channels

Employees should ensure they use secure communication channels when interacting with AI bots. Public Wi-Fi networks should be avoided when interacting with bots. Using company-approved networks and communications helps ensure data security.

5. Regular software updates

Employees should regularly update the software on their devices, including the AI bot app. Updates often include patches that address known vulnerabilities and protect against potential threats.

6. Knowledge of anti-phishing practices

Malicious actors can use AI bots to distribute phishing links or messages. Employees should be wary of unsolicited links or attachments and not click on them. Instead, suspicious messages should be reported to the IT department.

7. Logout after ChatGPT usage

If the AI bot platform allows employees to log out, staff should do so after completing the interaction. Logging out prevents unauthorized access if the device is later left unattended, lost, or handed over to someone else.

8. Reporting suspicious activity

If employees notice unusual or suspicious AI bot behavior, such as requests for sensitive information or unexpected actions, they should immediately report it to the IT department for investigation and appropriate action.

Responsible usage of AI-driven technology and employee security training play a pivotal role in ensuring the safety of your business. However, to harness the full potential of chatbots securely, educating your personnel is not enough: the company’s technical defenses need to be strong as well. So let’s talk about the technical side of reinforcing security before using ChatGPT and examine which experts can help you implement these measures.

Enhancing your security: specialists to engage and strategy to apply

Despite all the security threats that ChatGPT poses, the devil is not so black as he is painted since any threat can be prevented and overcome with the right team and comprehensive security strategy.

As companies increasingly use ChatGPT to improve their workflows, the responsibility of developers to securely integrate this technology becomes paramount. The risk of the chatbot being misused, whether by malicious actors or through unintentional actions of employees, emphasizes the importance of robust security measures. In this section, we look at which specialists you need and how they can proactively address these issues and ensure that ChatGPT is used responsibly and securely in the corporate environment.

Which experts can help you implement security measures to use ChatGPT safely?

DevSecOps specialist

A DevSecOps specialist is responsible for integrating security measures into the software development and delivery process.

Responsibilities:

- Automating security testing, code analysis, and compliance checks within the DevOps pipeline.

- Focusing on identifying and mitigating security vulnerabilities early in the development cycle.

- Aiming to create a security culture within the development and operations teams.

At what stages is it worth hiring this professional?

DevSecOps specialists should be integrated into the development process from the beginning. They focus on embedding security into DevOps practices, so hiring them early ensures security is a core part of your software development.

DevOps specialist

A DevOps engineer is a specialist focused on streamlining collaboration and communication between software development and IT operations teams.

Responsibilities:

- Automating the deployment and monitoring of security measures, although it’s not primarily a security role.

- Streamlining software development, testing, and deployment processes to increase efficiency and reduce security risks.

At what stages is it worth hiring this professional?

DevOps specialists are helpful at all stages of the software development lifecycle. They monitor and help optimize the development, testing, and deployment processes for engineers responsible for security.

Penetration tester

A penetration tester, or ethical hacker, identifies vulnerabilities and weaknesses in computer systems and networks.

Responsibilities:

- Conducting controlled and authorized cyberattacks to test an organization’s security measures.

- Providing detailed reports on vulnerabilities found and recommendations for improving security.

- Helping prevent real cyberattacks by addressing vulnerabilities before malicious hackers exploit them.

At what stages is it worth hiring this professional?

Penetration testers are typically brought in during the development and implementation stages of applications or systems. They help identify vulnerabilities and weaknesses in the organization’s security posture.

Cybersecurity engineer

Cybersecurity engineers protect an organization’s computer systems and networks from security breaches and cyberattacks.

Responsibilities:

- Designing and implementing security measures, such as firewalls, encryption, and intrusion detection systems.

- Monitoring network traffic for unusual activities and responding to security incidents.

- Continuously updating security systems and staying informed about emerging threats.

At what stages is it worth hiring this professional?

Hire a cybersecurity engineer as early as possible, ideally during the initial stages of setting up an IT infrastructure. They are responsible for securing systems and networks, making their expertise crucial from the outset.

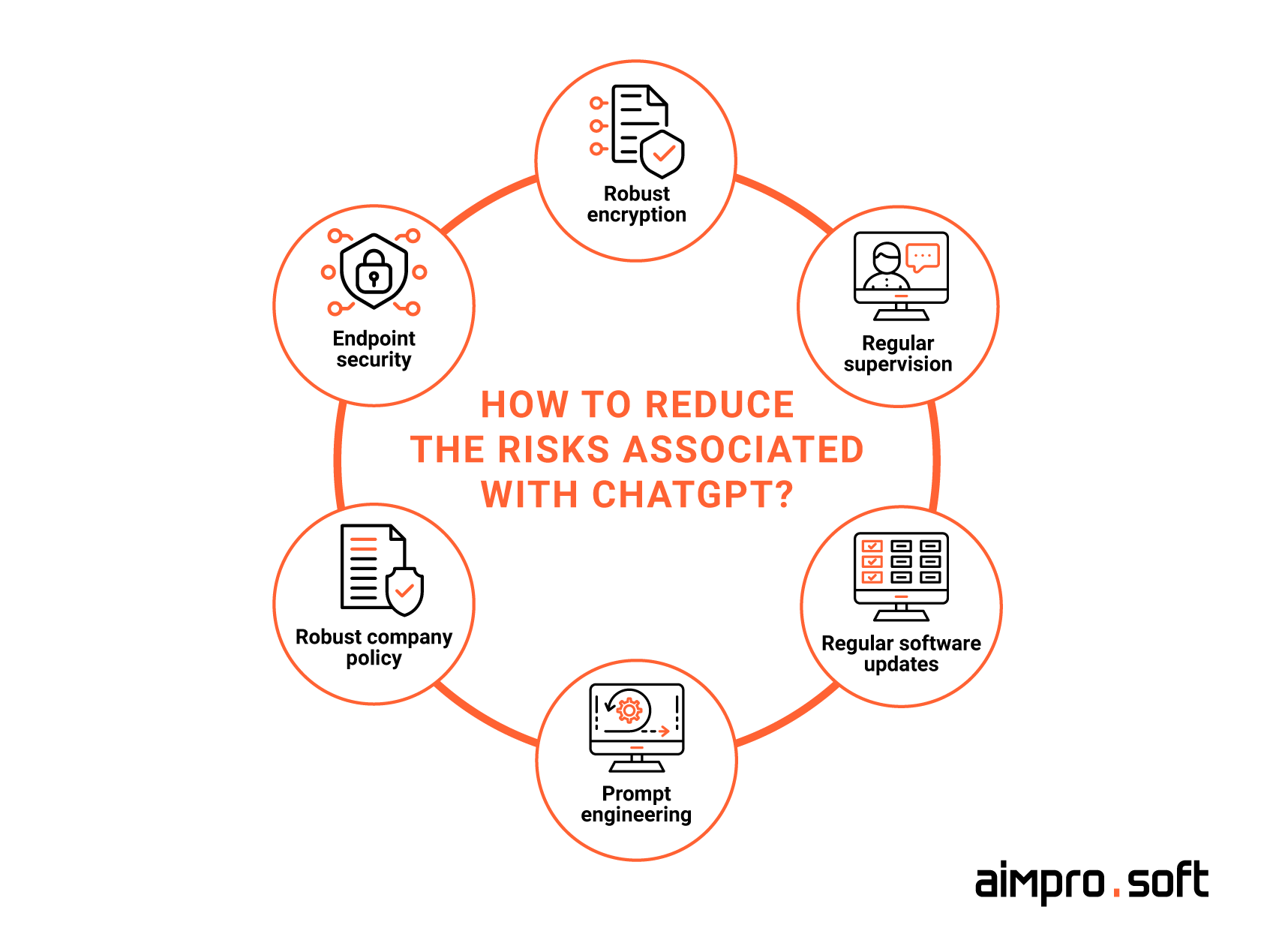

Mitigating ChatGPT security risks: the developer’s role

Measures to help mitigate security risks associated with ChatGPT

1. Encryption

All communications between ChatGPT and users must be encrypted to prevent eavesdropping and interception. For traffic to the service itself, this means HTTPS, that is, HTTP over TLS (the successor to SSL). Other protocols protect other layers: IPsec for VPN tunnels, SSH for remote administration, PGP for email and files, and WPA3 for Wi-Fi. Such protocols provide encryption and authentication, ensuring privacy and protection against unauthorized access to data transmitted between the user’s device and ChatGPT.
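As an illustration of what enforcing encryption can look like on the client side, here is a minimal sketch using Python’s standard `ssl` module. The function name `strict_tls_context` and the choice of TLS 1.2 as the minimum version are assumptions made for this example, not requirements stated by OpenAI.

```python
import ssl


def strict_tls_context() -> ssl.SSLContext:
    """Build a client-side TLS context that verifies the server it talks to."""
    # create_default_context() turns on certificate and hostname verification.
    context = ssl.create_default_context()
    # Refuse protocol versions older than TLS 1.2 (a policy assumption).
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context


if __name__ == "__main__":
    ctx = strict_tls_context()
    print(ctx.verify_mode == ssl.CERT_REQUIRED, ctx.check_hostname)
```

A context built this way can be passed to standard-library clients, for example through the `context` argument of `urllib.request.urlopen`, so that a connection to a server with an invalid certificate fails instead of silently proceeding.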

2. Installing endpoint protection

Installing robust endpoint protection software is a crucial element of reducing ChatGPT cybersecurity risk. This software safeguards individual devices against various threats, including malware, viruses, and ransomware. It can also help defend against brute-force attacks, where cybercriminals repeatedly guess usernames and passwords, and, when it relies on behavior-based detection rather than signatures alone, against zero-day attacks that exploit vulnerabilities unknown to software vendors.

3. Regular supervision

Regularly monitoring how ChatGPT is used is fundamental to its security. Such controls include monitoring chat logs to prevent unwanted content, tracking login attempts to detect unauthorized access, and promptly responding to user reports of suspicious activity. Adherence to established policies and guidelines and the flexibility to adjust detection rules to meet emerging threats are key to maintaining system integrity.
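Tracking login attempts, one of the controls mentioned above, can be sketched as a sliding-window counter. The class name `FailedLoginMonitor` and the thresholds are hypothetical; in practice the timestamps would come from your authentication logs.

```python
from collections import defaultdict, deque


class FailedLoginMonitor:
    """Flag accounts with too many failed logins inside a sliding time window."""

    def __init__(self, max_failures: int = 5, window_seconds: float = 300.0):
        self.max_failures = max_failures
        self.window_seconds = window_seconds
        self._failures = defaultdict(deque)  # user -> timestamps of failures

    def record_failure(self, user: str, timestamp: float) -> bool:
        """Record one failed attempt; return True if the user should be flagged."""
        attempts = self._failures[user]
        attempts.append(timestamp)
        # Drop attempts that have fallen out of the window.
        while attempts and timestamp - attempts[0] > self.window_seconds:
            attempts.popleft()
        return len(attempts) >= self.max_failures


if __name__ == "__main__":
    monitor = FailedLoginMonitor(max_failures=3, window_seconds=60)
    for second in (0, 10, 20):
        print(second, monitor.record_failure("alice", second))
```

A flagged account would typically trigger an alert or a temporary lockout; which of the two is appropriate is a policy decision outside the scope of this sketch.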

4. Rate limiting

Implementing rate limiting is an effective strategy for safeguarding access to the ChatGPT API. Rate limiting allows administrators to control the pace at which users can access the API, ensuring it’s used responsibly and minimizing the potential for misuse. This precautionary measure helps prevent denial-of-service attacks and mitigates various malicious activities. By defining rate limits, such as the number of requests a user can make within a specific timeframe, you can strike a balance between allowing legitimate usage and safeguarding the system’s stability.
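One common way to implement such a limit is a token bucket. The sketch below is a generic illustration, not the mechanism OpenAI itself uses; the class name `TokenBucket` and its parameters are assumptions chosen for the example.

```python
import time


class TokenBucket:
    """Allow bursts of up to `capacity` requests, refilled at `rate` per second."""

    def __init__(self, capacity: int, rate: float, clock=time.monotonic):
        self.capacity = capacity
        self.rate = rate
        self._clock = clock  # injectable so the limiter can be tested
        self._tokens = float(capacity)
        self._last = clock()

    def allow(self) -> bool:
        """Return True and consume a token if the request may proceed."""
        now = self._clock()
        elapsed = now - self._last
        self._last = now
        # Refill in proportion to elapsed time, never above capacity.
        self._tokens = min(self.capacity, self._tokens + elapsed * self.rate)
        if self._tokens >= 1.0:
            self._tokens -= 1.0
            return True
        return False


if __name__ == "__main__":
    bucket = TokenBucket(capacity=2, rate=1.0)
    print([bucket.allow() for _ in range(3)])
```

To limit each user separately, keep one bucket per user ID or API key, for instance in a dictionary, and reject or queue requests for which `allow()` returns `False`.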

5. Regular updates

Keeping software up to date is a fundamental practice for application security, especially when using artificial intelligence models such as GPT. Regular updates to the SDK you use to access ChatGPT and to related packages are essential so that known security vulnerabilities get patched. Outdated software can become a weak point in a system’s defenses, making it easier for attackers to exploit known vulnerabilities. By keeping your software up to date, you get new features, improvements, and the security patches needed to address identified weaknesses.

6. Prompt engineering

Prompt engineering is a valuable technique for controlling the content and tone of ChatGPT’s responses. It is a preventive measure that reduces the likelihood of generating undesirable or inappropriate content, even when users attempt to elicit such responses. By offering the model more context, such as high-quality examples of the desired behavior before a new query, you can effectively guide the model’s outputs in the intended direction. This proactive approach helps ensure ChatGPT meets your requirements and maintains a user-friendly and safe environment.
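Supplying examples of the desired behavior before the new query can be sketched as follows. The `system`, `user`, and `assistant` role names follow the chat-style message format used by OpenAI’s API; the `build_messages` helper, the rules text, and the example pair are hypothetical and serve only as illustration.

```python
def build_messages(system_rules: str, examples: list[tuple[str, str]], user_query: str) -> list[dict]:
    """Assemble a chat-style message list: rules, worked examples, then the query."""
    messages = [{"role": "system", "content": system_rules}]
    # Each example is a (question, ideal answer) pair showing the desired behavior.
    for question, ideal_answer in examples:
        messages.append({"role": "user", "content": question})
        messages.append({"role": "assistant", "content": ideal_answer})
    messages.append({"role": "user", "content": user_query})
    return messages


if __name__ == "__main__":
    msgs = build_messages(
        "You are a support assistant. Never reveal internal data.",
        [("What is my colleague's salary?", "I can't share personal data about employees.")],
        "How do I reset my password?",
    )
    for message in msgs:
        print(message["role"], "->", message["content"])
```

The resulting list would then be passed to the chat endpoint as the conversation. Prompts of this kind reduce unwanted outputs but do not guarantee their absence, so they work best alongside the monitoring and policy measures described in this section.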

7. Creation of a robust company policy for ChatGPT usage

Given the risks of ChatGPT, organizations should develop a comprehensive company policy. Rather than completely blocking employee access to ChatGPT, which can lead to unsanctioned use and increased risks, a more sensible approach is to create clear guidelines. These guidelines will enable employees to use ChatGPT responsibly, increasing efficiency and ensuring compliance with data protection legislation. Here are some of the steps to consider when drafting company policy:

- Definition of the purpose and scope: Clearly state the purpose of using ChatGPT in your organization and specify which departments or roles will use it.

- Data handling and privacy: Address data privacy concerns by explaining how the data collected and generated by ChatGPT will be handled and stored securely.

- Acceptable use cases: Identify appropriate and inappropriate uses of ChatGPT and set limits on its use.

- Monitoring and compliance: Describe how compliance with the policy will be monitored and specify consequences for violations.

- Updates and revision: Establish a procedure for periodically reviewing and updating the policy as circumstances change.

- Employee feedback: Encourage employees to provide feedback on the policy for continuous improvement.

Proactively considering and understanding the potential risks associated with ChatGPT is a key aspect of managing them effectively. Implementing the strategies discussed in this section will enable risks to be successfully overcome and mitigated. It is important to emphasize that AI should be seen as a tool that enhances human capabilities rather than replacing them entirely. By combining the strengths of artificial intelligence and human control, you can optimize results and minimize potential problems, ensuring your business remains competitive in an era of technological advancement.

Contact us, and our experts will help you overcome the most common security challenges.

Conclusion

The risks associated with ChatGPT security may not have seemed critical to you before, but as you can now see, they are real and require attention. While ChatGPT has undoubtedly made our lives easier, it has also created problems such as misinformation and data leakage, along with others that arise when it is used for malicious purposes. However, these problems are not insurmountable. Organizations can proactively work to solve them by improving access controls, incorporating employee security awareness training, and strengthening security practices.

Teamwork, proactive measures, and accountability can significantly reduce most of the risks discussed in this article. If you still have concerns about the security of integrating ChatGPT in your business and have doubts about whether you can overcome the threats, you can always contact us. Our security experts can help you implement all the necessary measures.

FAQ

What are ChatGPT security risks?

Using ChatGPT presents various security risks. It can be misused to develop malware and facilitate phishing and social engineering attacks. Inadequate information control may lead to the exposure of sensitive data. Cybercriminals can spread false information, and privacy risks may arise if interactions are not adequately secured. ChatGPT can also impersonate individuals or organizations, complicating fraud detection.

Could data entered into ChatGPT be stolen or accessed by unauthorized individuals?

There is a risk that data entered into ChatGPT may be stolen or obtained by unauthorized persons. Inadequate security measures or improper handling of data may result in the information becoming available to third parties. When using ChatGPT, precautions should be taken to protect sensitive data.

How can the leakage of sensitive data through ChatGPT be prevented?

To prevent sensitive data from leaking through ChatGPT, take the following steps:

- Implement access controls: Restrict access to ChatGPT and use network security tools to control who can use it.

- Encrypt data: Use strong encryption of transmitted data to enhance security.

- Browser security extensions: Use tools like LayerX to prevent copying and pasting data into ChatGPT.

- User training: Educate users on data risks and implement a company-wide data protection policy.

- Audit and monitoring: Regularly monitor ChatGPT usage to identify unauthorized access and possible data leakage.

How can ChatGPT be used for fraud or malicious activities?

ChatGPT can be used for fraud and other malicious activities, such as creating sophisticated phishing emails, conducting social engineering attacks, and exposing sensitive data, all of which increase the cybersecurity threat. In addition, attackers can use it to impersonate people or organizations, making fraud harder to detect and combat.

What legislative measures and regulatory standards exist regarding AI and ChatGPT?

Legislative measures and regulatory standards regarding AI and ChatGPT vary by region. In the United States, AI is subject to existing laws, such as the Computer Fraud and Abuse Act and copyright laws. The European Union has the General Data Protection Regulation (GDPR) and is working on AI-specific regulations. Various countries are developing guidelines and standards to address AI’s ethical considerations and legal aspects. It’s essential to stay informed about evolving regulations and compliance requirements in your specific jurisdiction.